Visual Generative Artificial Intelligence (vGenAI) has arrived, and as lecturer Anton Nemme aptly stated: “It’s here to stay.” Large language models such as Bard and ChatGPT have crept into our lives, but their visual counterpart, vGenAI, is only just beginning to gain traction. In this piece, we’ll explore the use cases, concerns, and ethical dilemmas that vGenAI presents to educational institutions, from UTS to the Year 7 Science class that I teach.

Being mindful of bias

“What does a scientist look like?” I asked my Year 7 students. With Stable Diffusion, we quickly conjured up a gallery of scientists, only to discover that – according to this AI – scientists are predominantly middle-aged men wearing glasses. The crestfallen expressions on the female students’ faces led to a two-part lesson unravelling gender stereotypes and discussing how GenAI models were trained. Though this example may seem isolated, results like it have prompted many researchers and academics to ponder the broader implications: to what extent will these biases, both realised and unrealised, shape our world?

Using vGenAI for iterative design and innovation

Within UTS, there’s a wide range of understanding and eagerness to delve into vGenAI. Academic and interior architecture course director Samantha Donnelly is keen to harness it as a learning tool within the Interior Design Course. She sees immense potential in using vGenAI for iterative designs, enhancing students’ ability to critically analyse interior design – a key CILO (Course Intended Learning Outcome) in many of her courses. But Samantha’s enthusiasm doesn’t stop at the practical applications; she’s also eager to sink her teeth into the ethics of vGenAI images, a facet of visual GenAI that’s, well, rather contentious. But more on that later…

Let’s not overlook the seasoned users of Computer Aided Design (CAD), who were harnessing generative technologies long before vGenAI became the talk of the town. Anton Nemme, PhD student and Lecturer of Advanced Manufacturing, is one such expert. While he acknowledges that specific tools such as DALL-E 2 and MidJourney are gaining attention, he’s quick to point out that his field has been at the forefront of innovation for years.

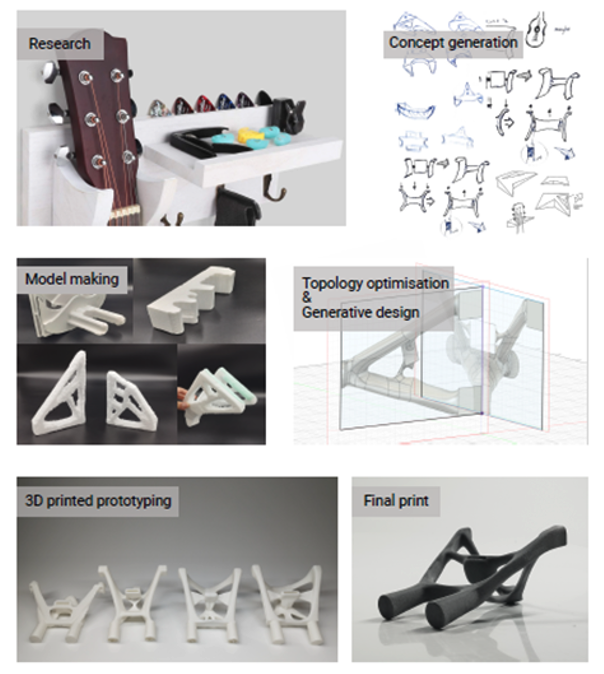

“AI-powered form generation processes like Generative Design and Topology Optimisation are already integrated with mechanical CAD applications, allowing students to produce exciting and efficient 3D printed structures. 2nd and 3rd year Product Design students are already exploiting those processes.”

– Anton Nemme

Collaborating with vGenAI – a bridge between creativity and ideation

Wajiha Pervez, an experimental textile designer, artist, PhD student, curator, and academic, specialises in exploring materials and design processes in the fashion industry. She emphatically states that “AI is [now] part of the creative process” and marvels at the boundless opportunities for “co-creating” to democratise the industry. Brimming with optimism, Wajiha sees vGenAI as a means to not only make business aspects more accessible to small-scale production but also to conserve time and resources. She points to sampling and sizing as two particularly wasteful aspects of fashion and design. Tools such as MidJourney are streamlining these processes, making them less resource-intensive and specialised. Read more in her case study, recently added to our Artificial Intelligence in learning and teaching resource collection.

Senior Lecturer, academic, and architect Dr Mohammed Makki has worked with Dave Pilgrim to integrate visual GenAI into a master’s course, with some remarkable results. Like Wajiha, Makki sees AI as a collaborator and co-creator, a bridge between creativity and ideation for his students.

Dr Makki has crafted a streamlined workflow using GenAI tools such as ChatGPT, MidJourney, and Stable Diffusion to co-create visualisations. Witnessing that ‘eureka’ moment in his students is proof enough of its efficacy as a teaching tool. But he cautions that it is not as simple as entering a prompt and receiving a product. Interventions at specific points are needed to develop robust visualisations. He also observes that users form a unique relationship with their AI tool, resulting in different outcomes and a distinct ‘flavour’ even from the same starting point.

Visual Communication (VISCOM) Course Director Dr Andrew Burrell and Lecturer Monica Monin offered a critical perspective on the recent buzz surrounding vGenAI. They assert that “students, for the most part, are not interested in replicating someone else’s style.” Concerns about academic integrity within the VISCOM program are minimal, as students are more driven to cultivate their own unique style and flavour. As discussed at the DAB and IML collaborative workshop, Andrew and Monica initiated conversations about vGenAI early in their courses, fostering both critical and creative practice. Andrew sees vGenAI as a tool that becomes part of a much wider toolset, enabling students to extend their intrinsic creativity.

Removing the aura from vGenAI

Navigating the world of vGenAI is akin to exploring a new frontier without a map – exciting, promising, but fraught with pitfalls and unexpected turns. The million-dollar questions of royalties, recognition, and copyright remain unanswered, leaving us to grapple with legal and ethical complexities. Among the creatives I interviewed, vGenAI is seen as a catalyst for creativity and an extension of what’s already there.

The age-old debate between stolen and inspired continues to perplex, now with an algorithmic layer that makes the waters not just muddy but downright swampy. The potential for vGenAI to transform industries is currently central to many discussions, but so too are the ethical dilemmas and uncertainties that accompany it. As we ponder the future of creativity, we must also consider the responsibilities and challenges that come with this innovative yet contentious tool. As Andrew Burrell and Monica Monin aptly put it, “we need to take the aura away from it”.